1 Introduction to Post-training Quantization

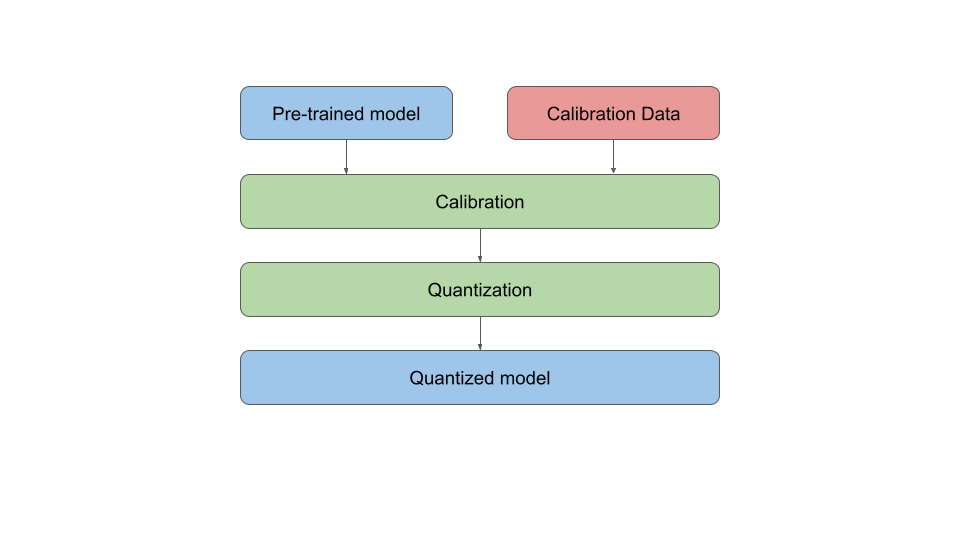

Post-training quantization (PTQ) uses a batch of calibration data to calibrate a trained model and converts the trained FP32 model directly into a fixed-point model, with no retraining of the original model. Only a few hyperparameters need to be adjusted, so the process is simple and fast. For this reason, PTQ is widely used in device-side and cloud-side deployment scenarios. We recommend that you try PTQ to see whether it meets your requirements.

Figure 1. PTQ Chart
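
To make the calibrate-then-convert idea concrete, here is a minimal sketch in plain Python. It is not the toolchain's actual implementation: it assumes symmetric 8-bit quantization with a max-absolute-value calibration rule, and the function names (`calibrate_scale`, `quantize`, `dequantize`) are hypothetical, chosen only for illustration. Real PTQ tools typically offer several calibration methods and work on whole models rather than flat lists of values.

```python
import random


def calibrate_scale(calibration_batches, num_bits=8):
    """Derive one scale factor from calibration data (assumed max-abs rule)."""
    qmax = 2 ** (num_bits - 1) - 1  # 127 for 8 bits
    max_abs = max(abs(v) for batch in calibration_batches for v in batch)
    # Guard against an all-zero calibration set.
    return max_abs / qmax if max_abs > 0 else 1.0


def quantize(values, scale, num_bits=8):
    """Map FP32-style values to signed integers, clipping to the valid range."""
    qmax = 2 ** (num_bits - 1) - 1
    return [max(-qmax, min(qmax, round(v / scale))) for v in values]


def dequantize(q_values, scale):
    """Map integers back to floating point to inspect the quantization error."""
    return [q * scale for q in q_values]


# Synthetic stand-in for a batch of calibration data (no training involved).
random.seed(0)
calibration_batches = [[random.gauss(0.0, 1.0) for _ in range(64)] for _ in range(4)]

scale = calibrate_scale(calibration_batches)
sample = calibration_batches[0]
q_sample = quantize(sample, scale)
restored = dequantize(q_sample, scale)
max_error = max(abs(a - b) for a, b in zip(sample, restored))
print(f"scale={scale:.6f}, max round-trip error={max_error:.6f}")
```

Because the sample is drawn from the calibration set itself, no value is clipped, and the round-trip error stays within half a quantization step (`scale / 2`). Data outside the calibrated range would be clipped, which is one reason the choice of calibration data matters.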